Turn Web Data Into Powerful Workflows: Firecrawl 🤝 Activepieces

We’re excited to showcase our integration with Firecrawl, a blazing-fast, AI-native web scraping and crawling platform. When paired with Activepieces, Firecrawl turns raw web data into actionable insights, no code needed.

Together, Firecrawl and Activepieces make it effortless to automate tasks like monitoring competitors, gathering fresh industry news, extracting structured data from any website, and more.

What is Firecrawl?

Firecrawl is an AI-powered web crawling and scraping tool designed to convert websites into clean, structured, LLM-ready data in formats such as markdown or JSON. It lets developers reliably extract data from a single URL or an entire website with one API call, handling complexities like JavaScript rendering, proxies, caching, rate limits, and dynamic content. This makes it well suited to AI applications, especially those that train or feed large language models (LLMs).
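
To make the "single API call" idea concrete, here is a minimal sketch of what a scrape request could look like outside Activepieces. It assumes Firecrawl's hosted v1 scrape endpoint and a `formats` field in the request body, so check the current Firecrawl API reference before relying on either. The function name `build_scrape_request` and the placeholder key are illustrative only. The request is prepared but not sent, so the snippet runs without a network connection.

```python
import json
import urllib.request

# Assumption: Firecrawl's hosted v1 scrape route. Verify against the API docs.
FIRECRAWL_SCRAPE_URL = "https://api.firecrawl.dev/v1/scrape"


def build_scrape_request(api_key: str, page_url: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request asking for markdown output."""
    payload = {"url": page_url, "formats": ["markdown"]}
    return urllib.request.Request(
        FIRECRAWL_SCRAPE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key and URL, for illustration only.
request = build_scrape_request("fc-YOUR-API-KEY", "https://example.com/pricing")
print(request.get_method(), request.full_url)

# Sending the request would look like this:
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
```

In Activepieces you never write this yourself: the Scrape Website action builds the request from the fields you fill in.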

What is Possible With Firecrawl On Activepieces

Firecrawl Actions

- Scrape Website

Scrape a website by performing a series of actions like clicking, typing, taking screenshots, and extracting data.

- Crawl Results

Get the results of a crawl job.

- Start Crawl

Start crawling multiple pages from a website based on specified rules and patterns.

- Custom API Call

Make a custom API call to a specific endpoint.
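
Start Crawl and Crawl Results work as a pair: the first kicks off a job, and the second is called until the job has finished. The polling pattern behind that can be sketched as follows. `get_status` is a hypothetical callable standing in for whatever fetches the job state, and the `status` and `data` field names are assumptions about the response shape rather than a confirmed contract.

```python
import time
from typing import Callable


def wait_for_crawl(
    get_status: Callable[[], dict],
    poll_seconds: float = 5.0,
    max_polls: int = 60,
) -> list:
    """Poll a crawl job until it finishes and return its scraped pages.

    `get_status` is a hypothetical callable that returns the job's current
    state. The "status" and "data" keys are assumed field names.
    """
    for _ in range(max_polls):
        job = get_status()
        if job.get("status") == "completed":
            return job.get("data", [])
        if job.get("status") == "failed":
            raise RuntimeError("crawl job failed")
        time.sleep(poll_seconds)
    raise TimeoutError("crawl job did not finish in time")


# Usage with a fake job that completes on the third poll.
responses = iter([
    {"status": "scraping"},
    {"status": "scraping"},
    {"status": "completed", "data": [{"markdown": "# Pricing"}]},
])
pages = wait_for_crawl(lambda: next(responses), poll_seconds=0)
print(len(pages))
```

Capping the number of polls keeps a stuck job from blocking the rest of the flow indefinitely.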

💡Use Case Spotlight

In this section, we explore two powerful use cases of Firecrawl with Activepieces.

1. Competitor Pricing Change Alert

This automation flow runs weekly and tracks competitor pricing changes. It retrieves the latest competitor pricing data from a Google Sheet, scrapes current prices, and uses AI to compare the old and new prices. If a change is detected, the flow updates the Google Sheet and sends an email notification to keep you informed of any pricing updates.

Here is the link to the flow template: https://cloud.activepieces.com/templates/2RrncY4W3FaRBsNVCZVgG

Now let's build the flow.

Prerequisites

- Activepieces Account

- Firecrawl Account

- Firecrawl API key: When you sign up for Firecrawl, click on the Dashboard button, and you will find your API key on the dashboard page.

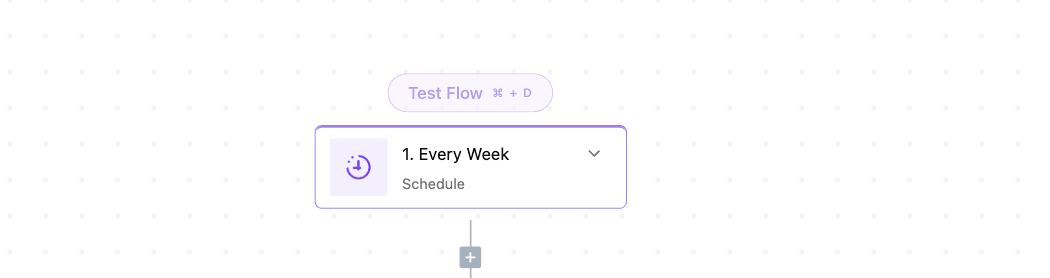

Step 1: Add your trigger

The trigger for this workflow is a schedule set to Every Week.

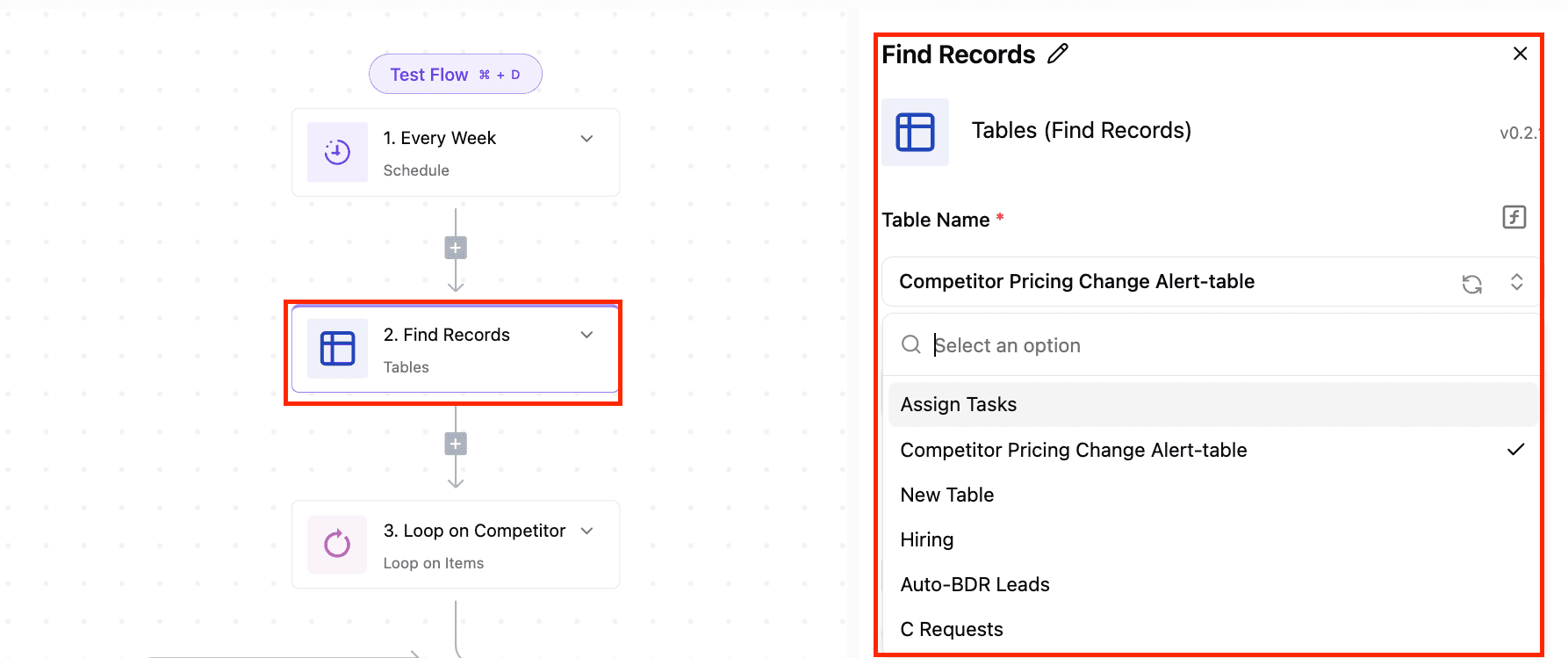

Step 2: Get Competitor Pricing From Activepieces Tables

This step retrieves competitor pricing from your Activepieces tables.

- Select the Find records action from the Activepieces tables piece

- Select your desired table name

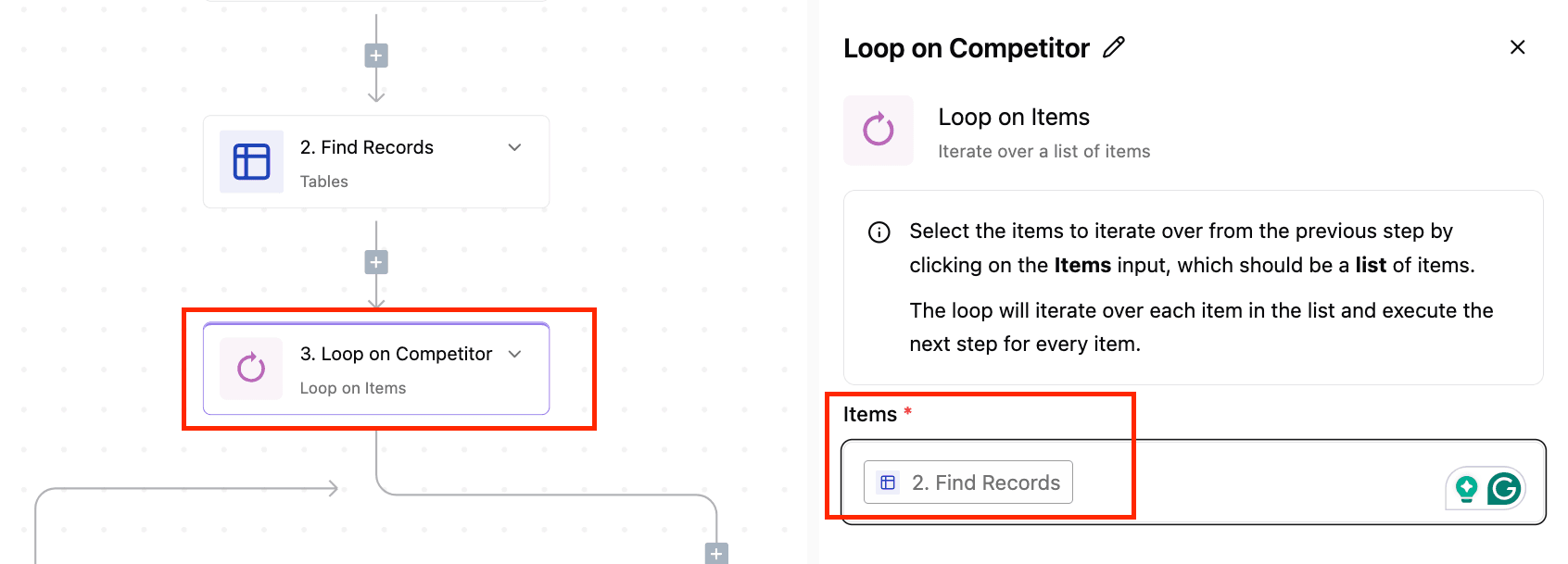

Step 3: Loop on Competitor Pricing

Since this automation retrieves a set of competitor prices, we need to act on each retrieved record one at a time. That is what the loop step does.

For this step, I am passing in the sheet data to be looped over.

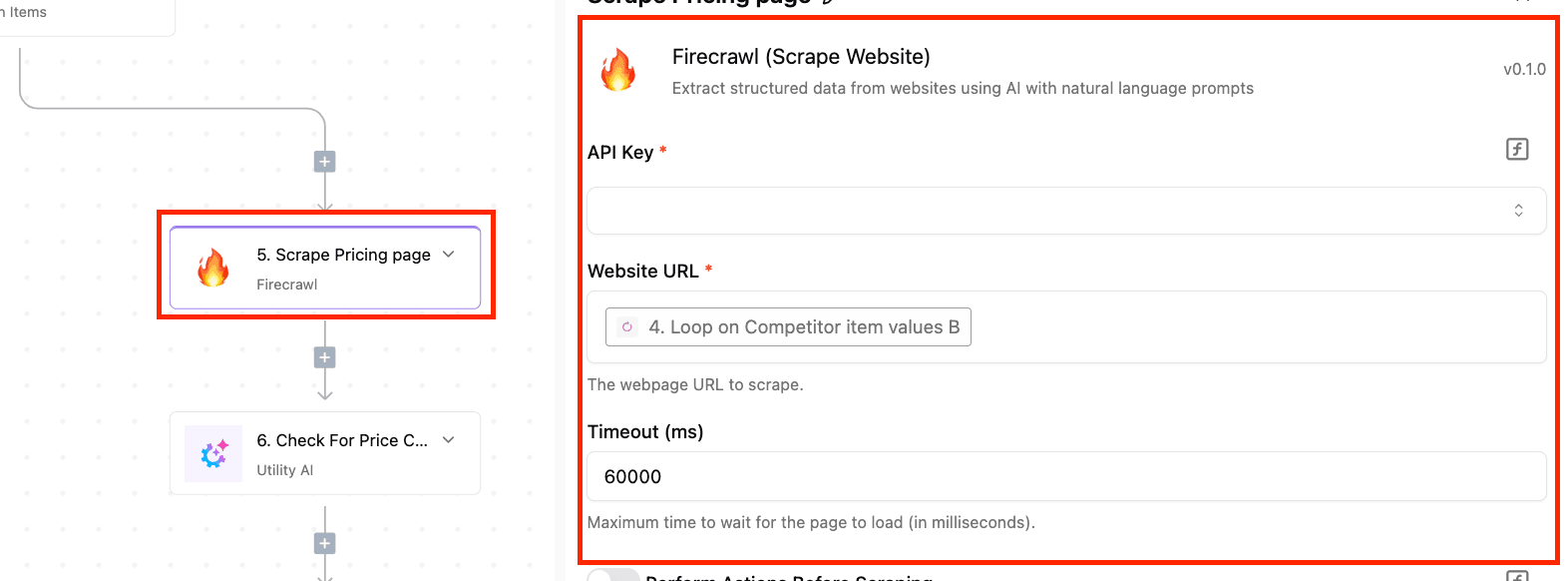

Step 4: Scrape Competitor Pricing Page With Firecrawl

This is where we scrape each competitor's pricing page with Firecrawl.

- Enter your Firecrawl API key

- Paste your website URL

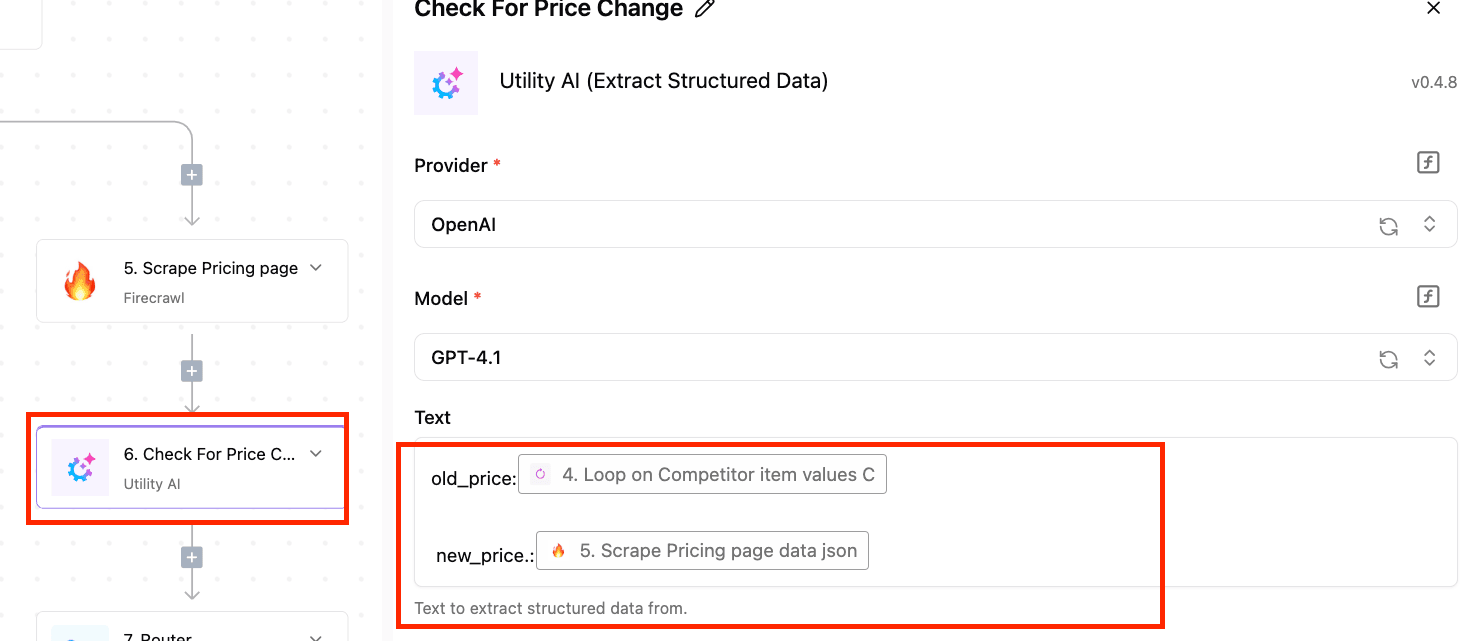

Step 5: Extract Structured Data Using Utility AI

- Select the Extract Structured Data action from the Utility AI piece, which is a native AI piece on Activepieces.

- Select your provider, model, and the text you want to extract data from (the scraped data from Firecrawl and the old price from the Google Sheet).

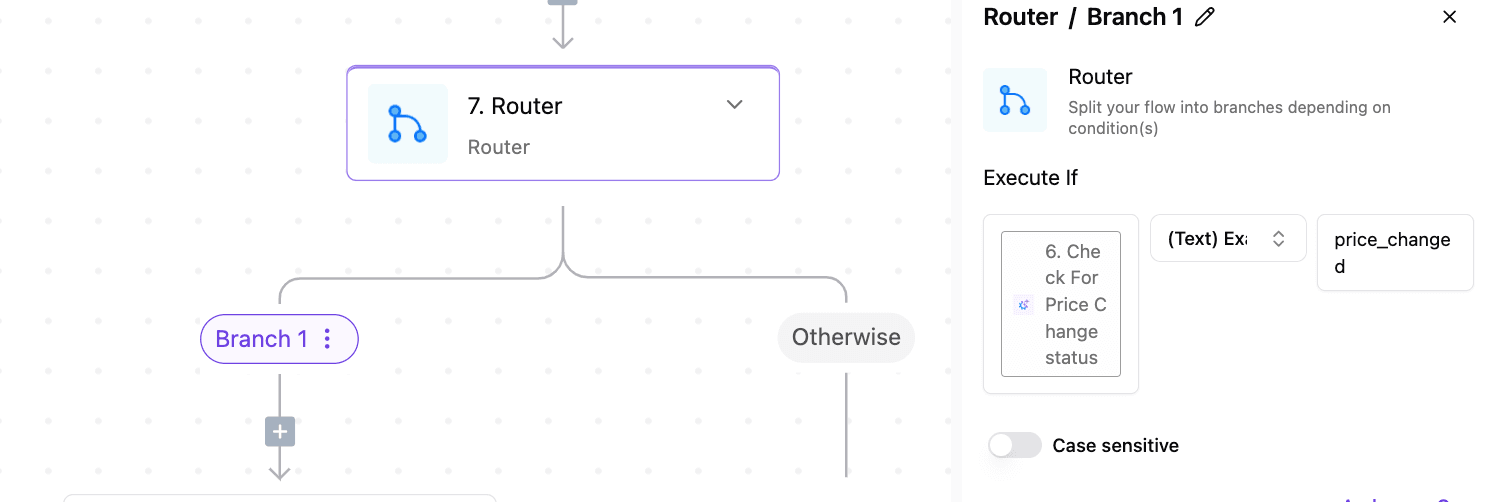

Step 6: Add Router

This step carries out different actions depending on whether there is a price change.

Branch 1 is used to check if there is a price change. If there is, the next set of actions will be executed.

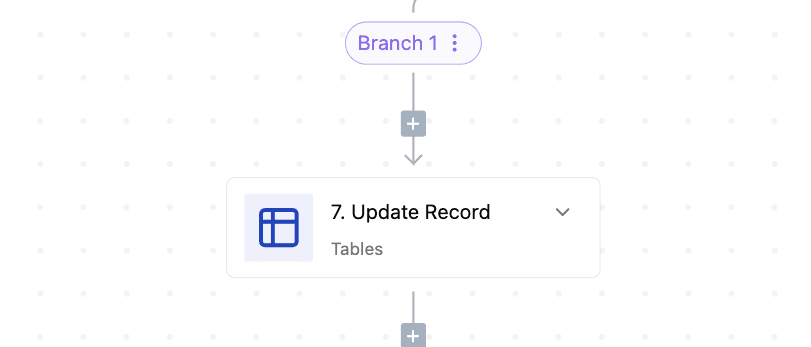
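
In the flow, the AI step decides whether the price changed. As a rough illustration of the condition the router branch tests, here is a deterministic sketch that normalizes two price strings before comparing them. This is a swapped-in technique for clarity, not what the template does internally, and the helper names `parse_price` and `price_changed` are hypothetical.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Pull the first number out of a price string such as "$1,299.00/mo"."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    # Drop thousands separators so "1,299" and "1299" compare equal.
    return Decimal(match.group().replace(",", ""))


def price_changed(old_price: str, new_price: str) -> bool:
    """Compare prices numerically so formatting differences are ignored."""
    return parse_price(old_price) != parse_price(new_price)


print(price_changed("$29/month", "$29.00"))  # same amount, different formatting
print(price_changed("$29/month", "$35/month"))
```

Comparing parsed numbers instead of raw strings avoids false alerts when a competitor only changes how a price is displayed.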

Step 7: Update the sheet

In this step, the sheet is updated with the new price if there is a price change.

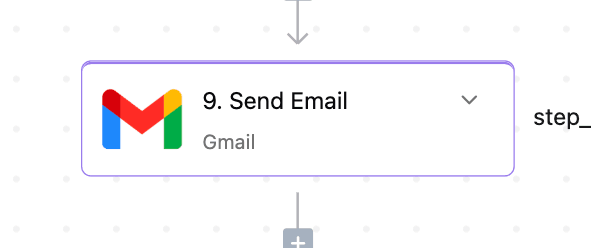

Step 8: Send Email

After the sheet is updated, an email is sent to the person in charge of monitoring price changes so that they can act quickly.

2. Industry News

This daily automation gathers the latest industry news by scraping a specified news website. It starts by getting the current date and then extracts all article URLs from the news feed. It loops through each article, checks if it was published today, and if so, scrapes the content and generates a summary. All the summaries are then compiled and sent via email, keeping you up-to-date with the latest industry developments.

Here is the link to the flow template: https://cloud.activepieces.com/templates/MGiKJxnywTm4vx9i0aBG2

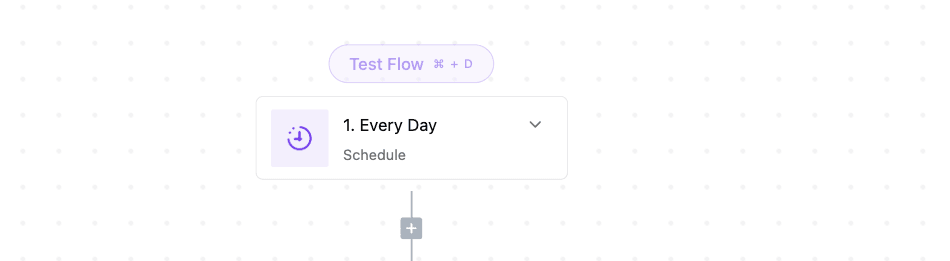

Step 1: Add your trigger

For this automation, the trigger is a schedule set to Every Day.

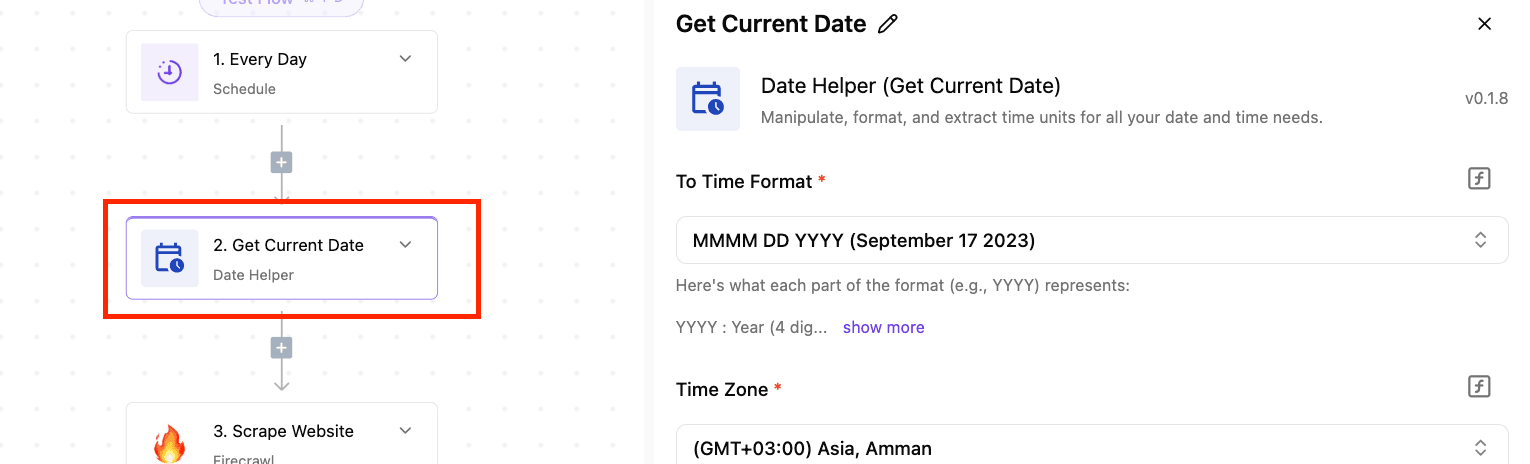

Step 2: Get the current date

We need this step to make sure that we only pick up today's news from our target website.

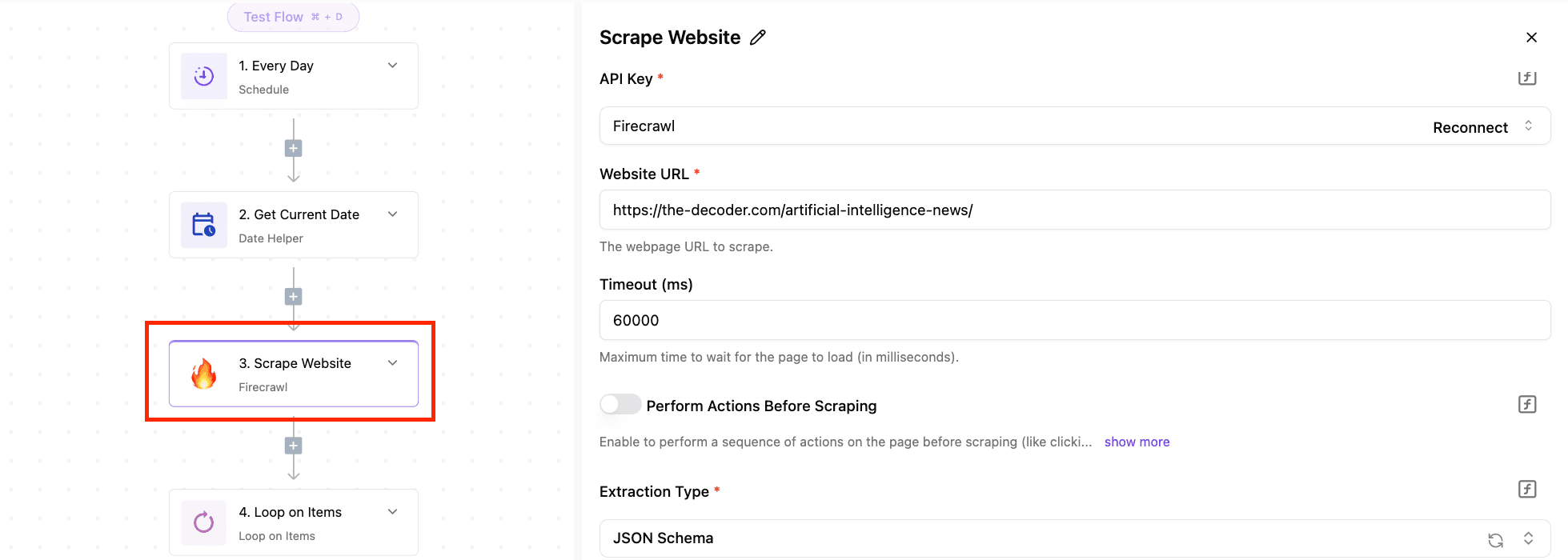

Step 3: Scrape Website with Firecrawl

This step scrapes the list of articles from the news page.

Here, you need to enter your API key and the website URL.

Select JSON schema for the extraction type so that this step returns structured data.

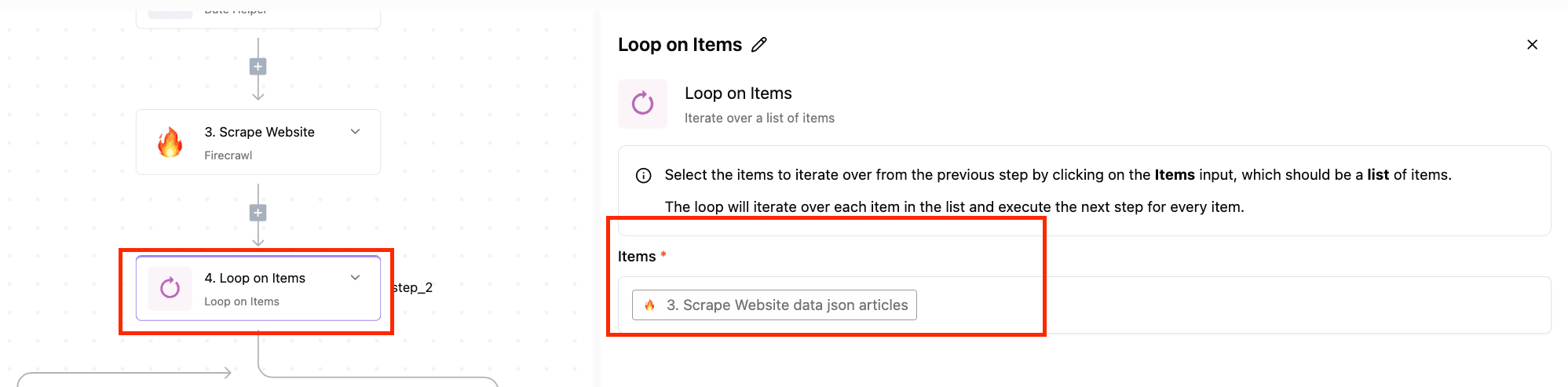
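
If you have not written a JSON schema before, the sketch below shows one possible shape for the article list. The field names `articles`, `title`, `url`, and `published_date` are assumptions chosen for this example, so adapt them to the site you are scraping. Printing the dictionary gives you JSON that you can paste into the schema field.

```python
import json

# Hypothetical schema: one object holding a list of articles.
article_list_schema = {
    "type": "object",
    "properties": {
        "articles": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "title": {"type": "string"},
                    "url": {"type": "string"},
                    "published_date": {
                        "type": "string",
                        "description": "Publication date in YYYY-MM-DD format",
                    },
                },
                "required": ["title", "url"],
            },
        }
    },
    "required": ["articles"],
}

print(json.dumps(article_list_schema, indent=2))
```

Asking for the publication date in a fixed format makes the later "published today" check much simpler.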

Step 4: Loop

This step lets you act on each item returned by the previous step.

The next set of actions happens inside the loop.

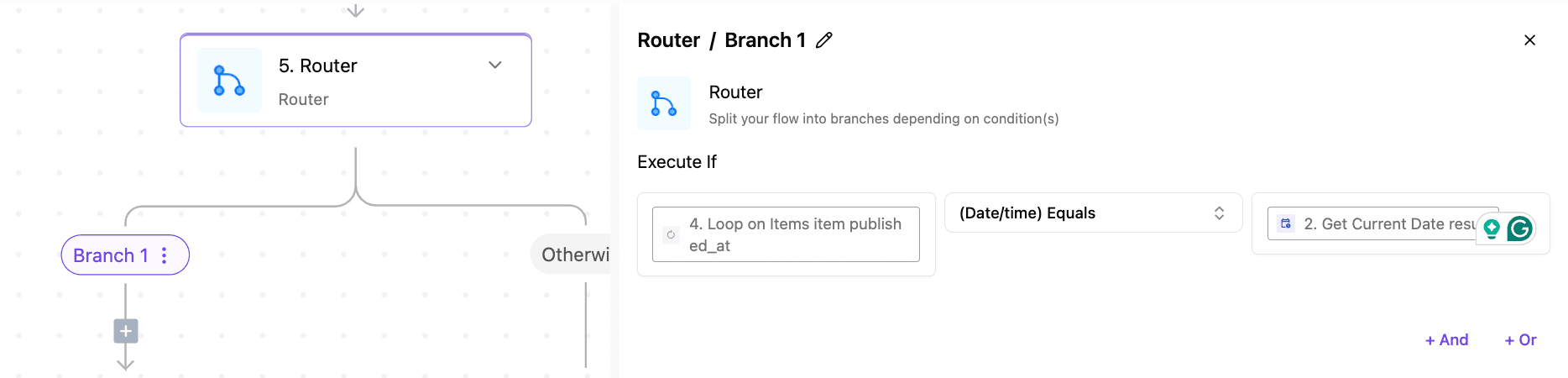

Step 5: Add Router

This step lets you take different actions based on certain conditions.

Branch 1 checks whether the article scraped from the website was published on the current day.

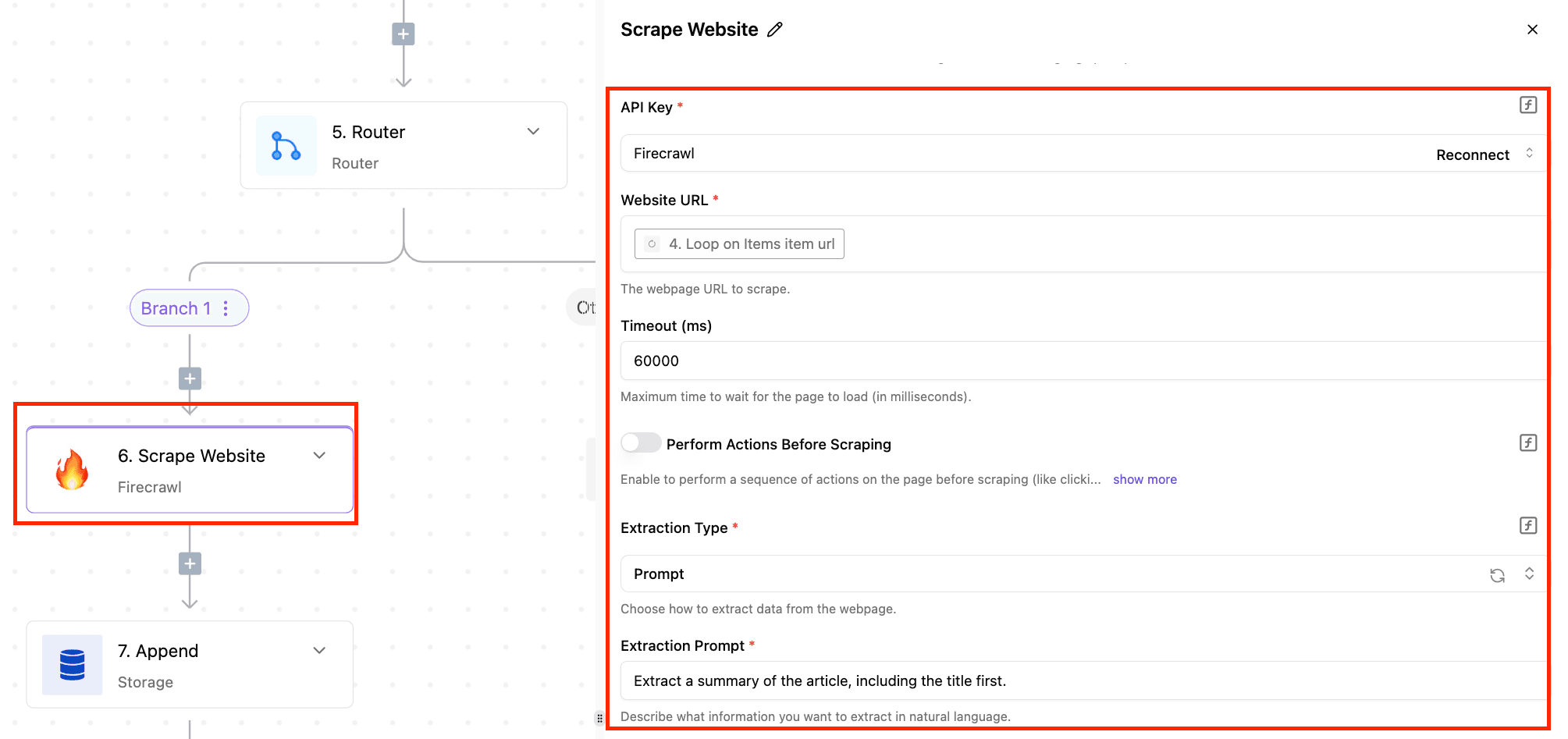
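
The branch condition boils down to comparing an article's publication date with the date from Step 2. A minimal sketch of that check, assuming the scraped date is an ISO 8601 string (the function name `is_published_today` is hypothetical):

```python
from datetime import date, datetime


def is_published_today(published: str, today: date) -> bool:
    """Return True when an ISO 8601 date or datetime string falls on `today`."""
    try:
        published_day = datetime.fromisoformat(published).date()
    except ValueError:
        # Unparseable dates are treated as "not today" instead of failing the run.
        return False
    return published_day == today


today = date(2025, 6, 1)  # in the flow, this comes from the "get current date" step
print(is_published_today("2025-06-01T08:30:00", today))
print(is_published_today("2025-05-31", today))
```

Real news sites format dates in many ways and across time zones, so this check is only as reliable as the date string the scrape step returns.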

Step 6: Scrape Website With Firecrawl

If the scraped data is from the current date, this step goes into the specific article and scrapes the main article content.

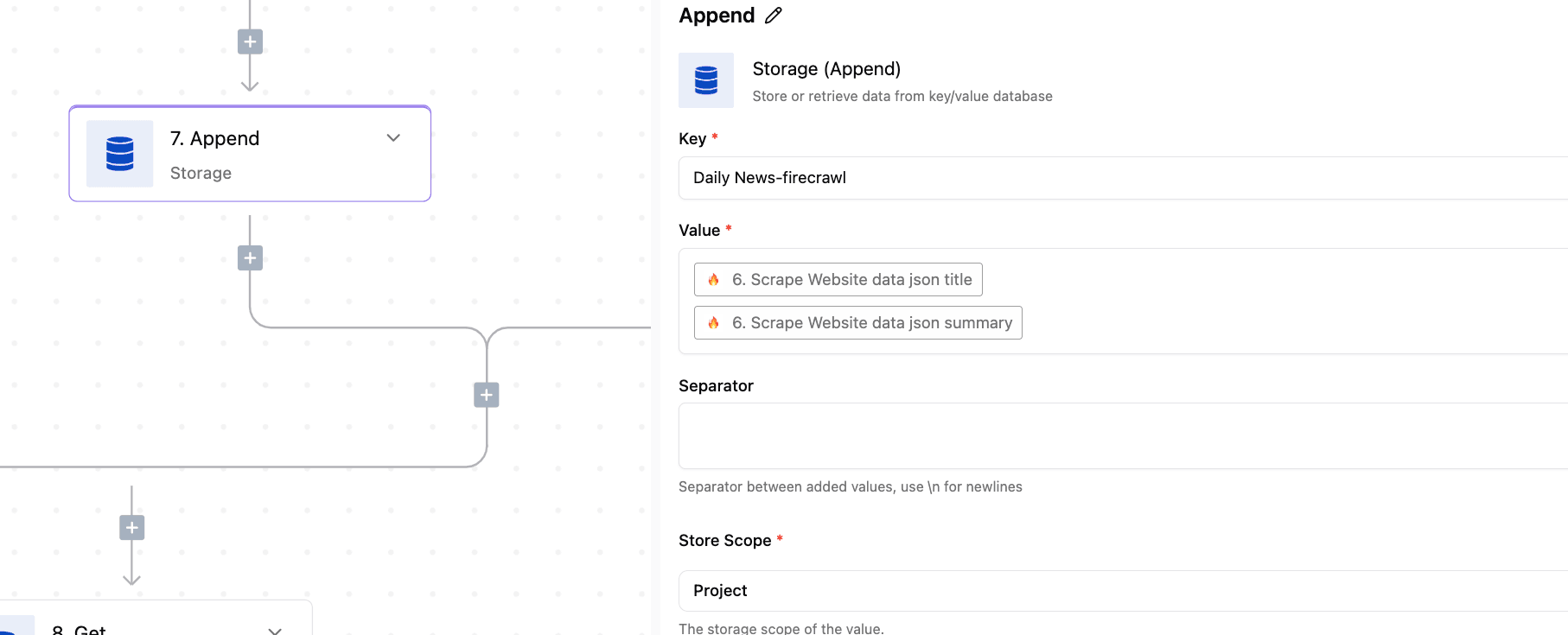

Step 7: Append data to storage

This step stores the scraped data so it can be used later in the automation.

The next set of steps happens outside the loop.

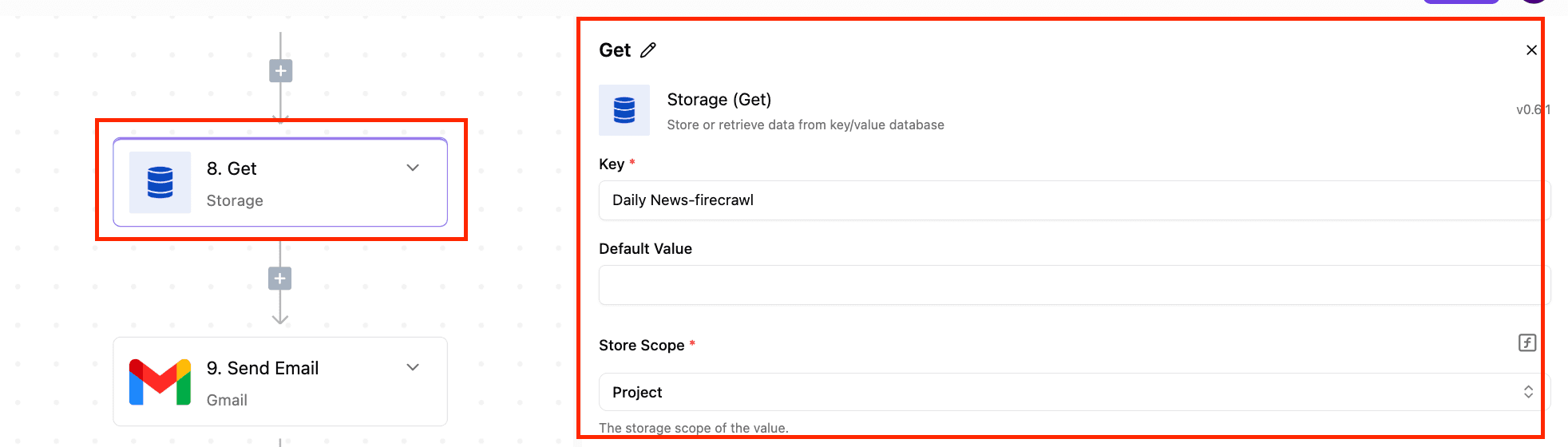

Step 8: Get data from storage

This step retrieves the data that we stored inside the loop.

Step 9: Send Email

Once the data is retrieved from storage, we send it as an email to the person in charge.

Step 10: Remove storage

This step removes the data from storage so that the next run starts with an empty store.
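
Steps 7 to 10 follow a common accumulate, read, and clear pattern. The sketch below mimics it with an in-memory stand-in. `FlowStorage` and the `daily_news` key are hypothetical names used for illustration and are not part of the Activepieces API.

```python
class FlowStorage:
    """In-memory stand-in for a key-value storage step (hypothetical helper)."""

    def __init__(self) -> None:
        self._values: dict = {}

    def append(self, key: str, text: str, separator: str = "\n\n") -> None:
        """Add text to the end of whatever is already stored under `key`."""
        existing = self._values.get(key)
        self._values[key] = text if existing is None else existing + separator + text

    def get(self, key: str, default: str = "") -> str:
        return self._values.get(key, default)

    def remove(self, key: str) -> None:
        self._values.pop(key, None)


storage = FlowStorage()

# Inside the loop: append one summary per article published today.
for summary in ["Summary of article A", "Summary of article B"]:
    storage.append("daily_news", summary)

# Outside the loop: read everything once, send it, then clear the key.
email_body = storage.get("daily_news")
storage.remove("daily_news")
print(email_body)
```

Skipping the final removal would cause tomorrow's email to include today's summaries as well.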

📌 What’s Next?

- Start building: You can explore the Firecrawl integration directly in Activepieces.

- Follow us on social for use cases, tutorials, and tips. LinkedIn: Firecrawl | Activepieces. Twitter: Firecrawl | Activepieces.